Background

A few weeks ago I decided to replace my ageing and bloated Drupal 7 blog. Any replacement had to meet the following criteria:

- The project git repo must be private.

- Hosting infrastructure had to be under my control and completely codified.

- The solution should require very little supporting infrastructure such as databases.

- Deployment of changes to the site or infrastructure must be automated.

These requirements immediately ruled out a few options, including GitHub Pages and SaaS blogging platforms like WordPress.com.

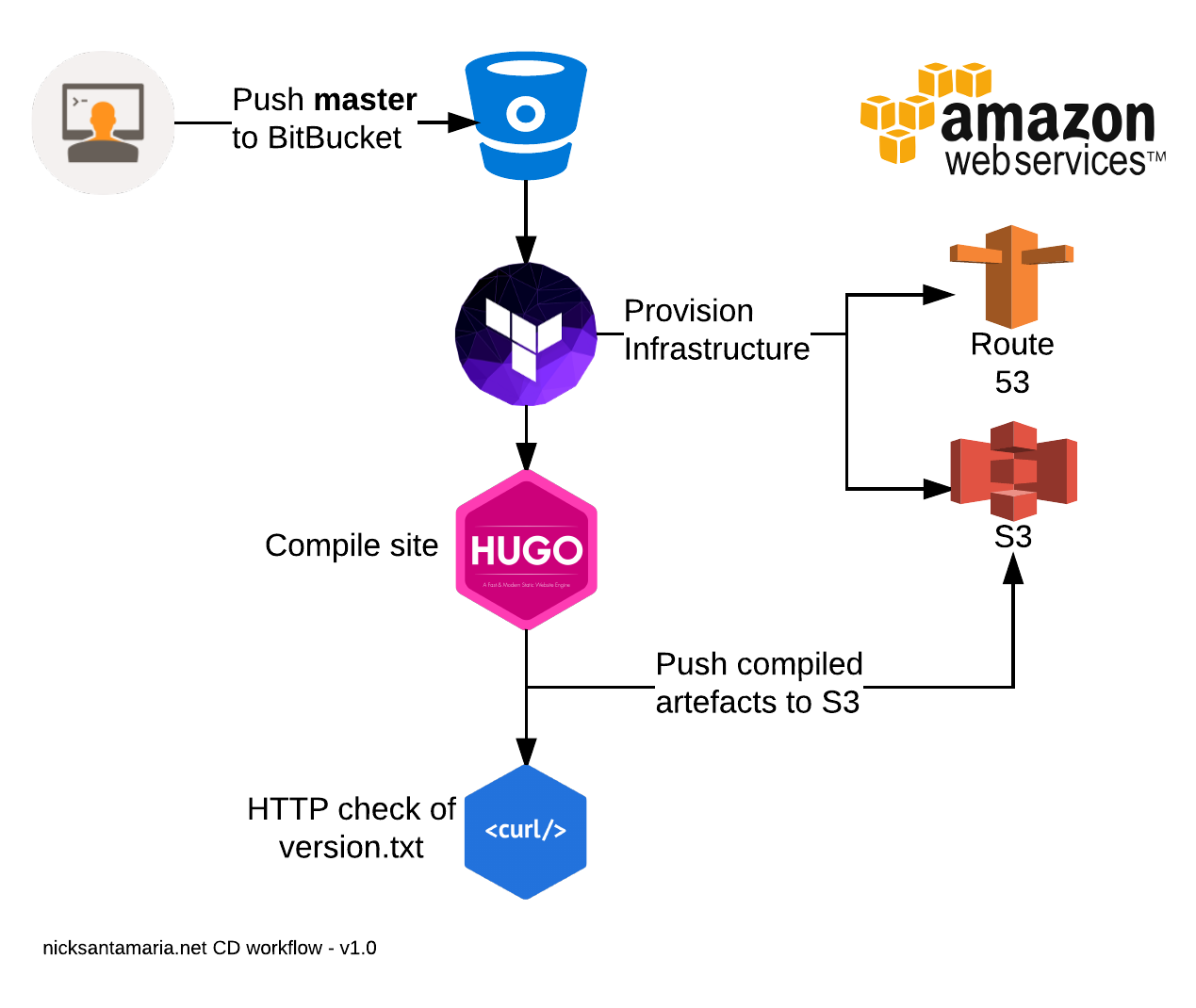

In the end I decided on the following architecture:

- BitBucket for free private repo hosting.

- BitBucket Pipelines for the CI/CD pipeline.

- Hugo for static site generation.

- AWS S3 for static web hosting.

- AWS Route 53 for DNS.

- Terraform for infrastructure management.

Repository Structure

To keep the workflow simple, I wanted everything codified in the main project repo:

- The Hugo site source code.

- The Terraform templates.

- The BitBucket Pipelines build configuration.

Below is the structure I ended up with.

```
nicksantamaria.net
├── bitbucket-pipelines.yml   <-- Pipeline configuration
├── hugo                      <-- Hugo site source files
│   ├── config.yml
│   ├── content
│   ├── public
│   ├── static
│   └── themes
└── terraform                 <-- Terraform templates
    ├── main.tf
    └── variables.tf
```

Terraform Configuration

Hosting the site on S3 requires a few AWS resources.

aws_s3_bucket to host the website files:

```hcl
resource "aws_s3_bucket" "www" {
  bucket        = "www.nicksantamaria.net"
  acl           = "public-read"
  force_destroy = true

  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [{
    "Sid": "PublicReadGetObject",
    "Effect": "Allow",
    "Principal": "*",
    "Action": ["s3:GetObject"],
    "Resource": [
      "arn:aws:s3:::www.nicksantamaria.net/*"
    ]
  }]
}
EOF

  website {
    index_document = "index.html"
    error_document = "404.html"
  }
}
```

aws_route53_record for the www.nicksantamaria.net CNAME record:

```hcl
resource "aws_route53_record" "www" {
  zone_id = "XXXX"
  name    = "www.nicksantamaria.net"
  type    = "CNAME"
  ttl     = "300"
  records = ["${aws_s3_bucket.www.website_domain}"]
}
```

aws_s3_bucket for the apex domain redirect:

```hcl
resource "aws_s3_bucket" "apex" {
  bucket        = "nicksantamaria.net"
  acl           = "public-read"
  force_destroy = true

  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [{
    "Sid": "PublicReadGetObject",
    "Effect": "Allow",
    "Principal": "*",
    "Action": ["s3:GetObject"],
    "Resource": ["arn:aws:s3:::nicksantamaria.net/*"]
  }]
}
EOF

  website {
    redirect_all_requests_to = "www.nicksantamaria.net"
  }
}
```

aws_route53_record for the nicksantamaria.net A (alias) record:

```hcl
resource "aws_route53_record" "apex" {
  zone_id = "XXXX"
  name    = "nicksantamaria.net"
  type    = "A"

  alias {
    name                   = "${aws_s3_bucket.apex.website_domain}"
    zone_id                = "${aws_s3_bucket.apex.hosted_zone_id}"
    evaluate_target_health = false
  }
}
```

If you want to adapt these for your own site:

- Replace ‘nicksantamaria.net’ with your own domain everywhere it appears, including the bucket policy ARNs.

- Replace zone_id = "XXXX" with the ID of your Route 53 hosted zone.
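If you don't have the hosted zone ID to hand, the AWS CLI's route53 list-hosted-zones-by-name command can look it up. Below is a minimal sketch, assuming the AWS CLI is installed and configured with credentials; the helper name hosted_zone_id is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Route 53 hosted zone ID for a domain,
# ready to paste into the zone_id settings of the Terraform templates.
# Assumes the AWS CLI is installed and has credentials configured.
hosted_zone_id() {
  local domain="$1"
  # Zone names are stored with a trailing dot (e.g. "example.com."), so the
  # JMESPath filter matches on "<domain>." to select exactly this zone.
  # IDs come back as "/hostedzone/<ID>"; sed strips that prefix.
  aws route53 list-hosted-zones-by-name \
    --dns-name "$domain" \
    --query "HostedZones[?Name=='${domain}.'].Id" \
    --output text | sed 's|^/hostedzone/||'
}
```

For example, hosted_zone_id nicksantamaria.net prints the bare ID. Filtering on the exact name matters because list-hosted-zones-by-name returns zones in name order starting from the given name, so the first result is not guaranteed to be the domain you asked for.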

Continuous Delivery Configuration

I chose BitBucket Pipelines for the CD pipeline because of its tight integration with BitBucket. It was also a good opportunity to evaluate its suitability for future projects.

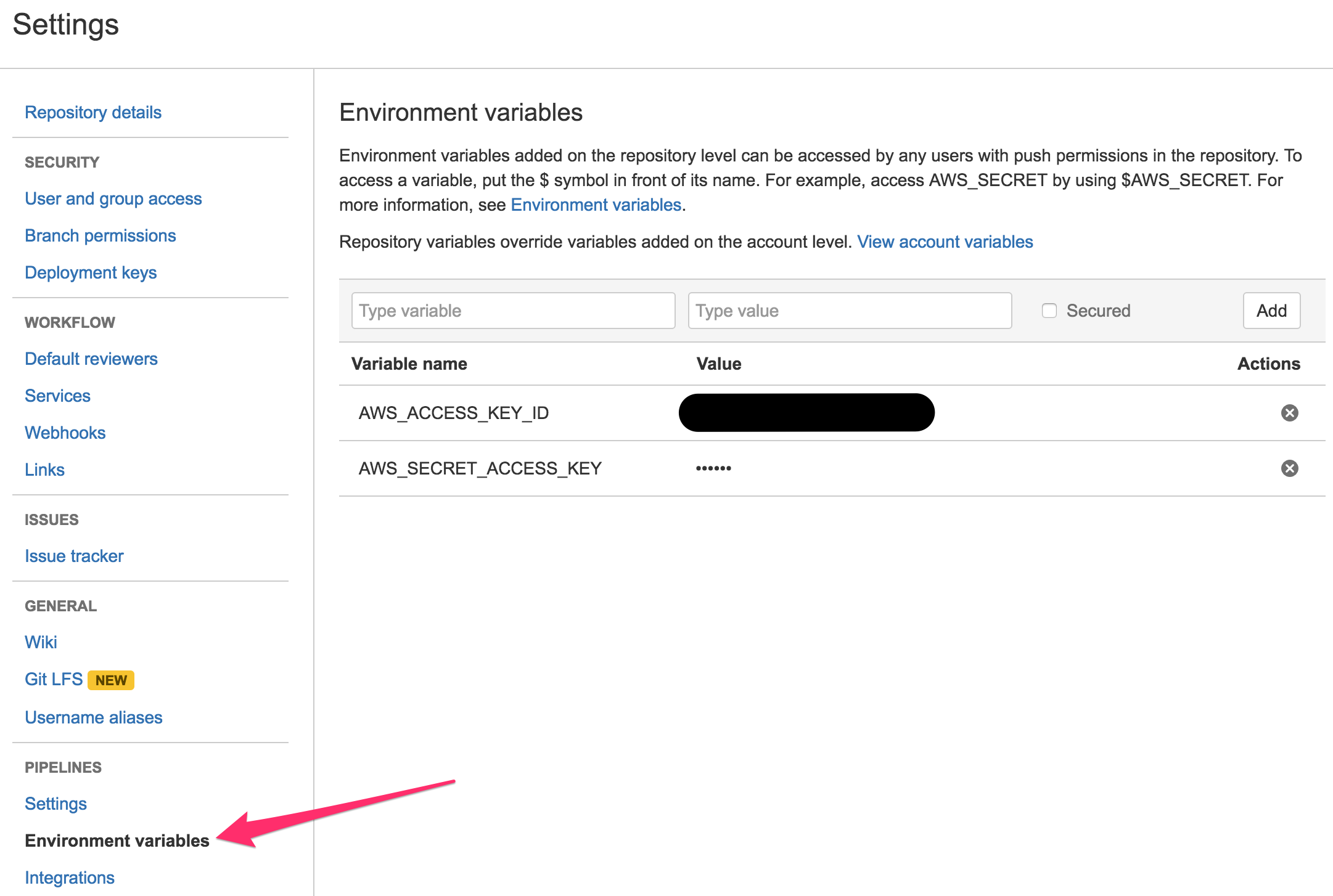

The first thing I needed to do was add AWS credentials to BitBucket Pipelines, which is done from the repository's settings page in BitBucket.
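Terraform's AWS provider and the AWS CLI both read credentials from the standard AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables. If the credentials are stored under those names, a fail-fast check at the start of the build gives a clearer error than a failed terraform or aws call later on. Below is a minimal bash sketch; the helper name require_env is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail if any of the named environment variables is
# unset or empty, listing every missing name on stderr.
require_env() {
  local name missing=0
  for name in "$@"; do
    # ${!name:-} is bash indirect expansion: the value of the variable
    # whose name is stored in $name, or empty if it is unset.
    if [ -z "${!name:-}" ]; then
      echo "missing required variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Run as require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY at the top of the script, a non-zero return fails the step before any infrastructure is touched.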

Each build had to execute the following tasks:

- Validate Terraform templates.

- Provision Terraform resources.

- Compile Hugo site.

- Sync compiled artefact to S3.

Here is this workflow visualised -

Here is the bitbucket-pipelines.yml file I created to achieve this.

```yaml
image: golang:1.7

pipelines:
  branches:
    # master is the production branch.
    master:
      - step:
          script:
            #
            # Setup dependencies.
            #
            - mkdir -p ~/bin
            - cd ~/bin
            - export PATH="$PATH:/root/bin"
            - apt-get update && apt-get install -y unzip python-pip
            # Dependency: Hugo.
            - wget https://github.com/spf13/hugo/releases/download/v0.17/hugo_0.17_Linux-64bit.tar.gz
            - tar -xvzf hugo_0.17_Linux-64bit.tar.gz
            - mv hugo_0.17_linux_amd64/hugo_0.17_linux_amd64 hugo
            # Dependency: Terraform.
            - wget https://releases.hashicorp.com/terraform/0.7.8/terraform_0.7.8_linux_amd64.zip
            - unzip terraform_0.7.8_linux_amd64.zip
            # Dependency: AWS CLI.
            - pip install awscli
            #
            # Provision Terraform resources.
            #
            - cd ${BITBUCKET_CLONE_DIR}/terraform
            # Ensure Terraform syntax is valid before proceeding.
            - terraform validate
            # Fetch remote state from S3 bucket.
            - terraform remote config -backend=s3 -backend-config="bucket=tfstate.nicksantamaria.net" -backend-config="key=prod.tfstate" -backend-config="region=ap-southeast-2"
            # Ensure this step passes so that the state is always pushed.
            - terraform apply || true
            - terraform remote push
            #
            # Compile site.
            #
            - cd ${BITBUCKET_CLONE_DIR}/hugo
            - hugo --destination ${BITBUCKET_CLONE_DIR}/_public --baseURL http://www.nicksantamaria.net --verbose
            # Create a file containing the build version.
            - echo "${BITBUCKET_COMMIT}" > ${BITBUCKET_CLONE_DIR}/_public/version.txt
            #
            # Deploy site to S3.
            #
            - aws s3 sync --delete ${BITBUCKET_CLONE_DIR}/_public/ s3://www.nicksantamaria.net/ --grants read=uri=http://acs.amazonaws.com/groups/global/AllUsers
            # Test the live version matches this build.
            - curl -s http://www.nicksantamaria.net/version.txt | grep ${BITBUCKET_COMMIT}
```

Notes

There are a few things worth explaining in more detail.

Dependency setup

The first section of the pipeline installs the dependencies needed by the rest of the build. I plan to improve this by creating a custom Docker image with all these utilities pre-installed, which I expect would cut the build time from about 2 minutes to about 30 seconds.

Terraform remote state

To ensure that the Terraform state is preserved between pipeline runs, the state file is stored in an S3 bucket called tfstate.nicksantamaria.net. I created this bucket manually (rather than with Terraform) so there is no risk of it being unintentionally destroyed during a terraform apply.
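The pipeline's terraform apply || true line guarantees the state push runs, but it also means a failed apply never fails the build, so the site is deployed anyway. Below is a minimal bash sketch of an alternative. It assumes the Terraform 0.7-era terraform remote push command used in the pipeline, and the function name apply_then_push is hypothetical. It records apply's exit status, always pushes the state, then returns the original status:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run "terraform apply", always push the remote state
# afterwards, and still report a failed apply to the caller.
# Assumes Terraform 0.7-style remote state ("terraform remote push").
apply_then_push() {
  local status=0
  # On failure, capture apply's exit code instead of discarding it.
  terraform apply || status=$?
  # Push state regardless; if the push itself fails, report that instead.
  terraform remote push || return $?
  return "$status"
}
```

Defined in a script checked into the repo, this function could replace the two terraform lines in the pipeline. The state is still pushed on every run, and a broken apply stops the build before the site is synced.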

Version checking

After Hugo compiles the site, an additional file called version.txt is placed in the docroot. It contains the git commit hash from the $BITBUCKET_COMMIT environment variable. The last command in the pipeline makes an HTTP request for this file and checks that the response contains the expected commit hash; if it doesn't, grep exits non-zero and the build fails.
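The pipeline checks version.txt exactly once. If the new file is not served immediately, for instance once a CDN such as CloudFront sits in front of the bucket, a retrying check is more forgiving. Below is a minimal bash sketch; the function name wait_for_version and the default attempt count and delay are assumptions, not settings from the original pipeline:

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a deployed version file until it contains the
# expected commit hash, giving up after a fixed number of attempts.
# Usage: wait_for_version URL EXPECTED [ATTEMPTS] [DELAY_SECONDS]
wait_for_version() {
  local url="$1" expected="$2" attempts="${3:-5}" delay="${4:-5}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    # -f makes curl fail on HTTP errors; grep -qF matches a fixed string.
    if curl -fsS "$url" | grep -qF "$expected"; then
      return 0
    fi
    # Only wait if another attempt is coming.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}
```

In the pipeline this would stand in for the final line, as wait_for_version http://www.nicksantamaria.net/version.txt "${BITBUCKET_COMMIT}".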

Conclusion

I am really happy with the end result, which achieved all of the goals I set out at the beginning.

BitBucket Pipelines is a brand-new service, and it lacks some key features offered by competitors like TravisCI and CircleCI:

- Environment variable definition in the build config file.

- Separation of concerns between setup, test and deployment phases of the build.

- Ability to have a subset of build steps shared between branches.

There are a few improvements I plan to make:

- Add CloudFront as a CDN.

- Use a custom Docker image for the CD builds to reduce build time.